Many organizations operate under the assumption that secure, frequent configuration backups guarantee recovery. In theory, having the data should resolve failures. But the reality is more sobering.

Imagine this: Your network infrastructure has all its configurations backed up. So why is the network still down?

It’s a scene that plays out too often in network operations rooms. The scheduled config backup ran without a hitch, yet somewhere behind the scenes, a misconfiguration remains hidden. Engineers scramble to identify which saved configuration state to roll back to, while the users’ patience wears thin. This is a crisis of uncertainty.

Why Backups Aren’t Enough

Enterprises invest heavily in tooling for backing up router, switch, firewall, and load balancer configurations, assuming these backups will carry them through any failure scenario. And while config snapshots are undeniably essential, they are only one piece of a much more complex recovery puzzle.

According to a 2023 Uptime Institute survey, over 50% of major outages cost more than $100,000, and 16% exceeded $1 million, with configuration-related errors frequently implicated. In most cases, it’s the lack of context about which backup is correct, why it should be restored, and whether it aligns with the current operational state, that slows recovery. The harsh truth is that having a config.txt file means little if it’s disconnected from the present-day infrastructure.

Misconfiguration: The Hidden Threat

Most traditional network recovery plans still assume the most recent config snapshot is the best option. But in today’s environments where service dependencies evolve constantly, this logic no longer holds. Misconfigurations often stem from untracked or improperly validated changes. And without strong change management practices or a live, accurate CMDB, these saves configurations lack the context needed for reliable recovery. A switch config from last week might no longer support a firewall rule updated yesterday. You can’t restore what you don’t fully understand.

Gartner estimates that up to 99% of firewall breaches and outages in network infrastructure are due to misconfigurations, not hardware failure (Gartner, 2023). That staggering figure shifts the focus to how well your systems understand what they’re capturing, and whether those configuration states are still valid in the live network state.

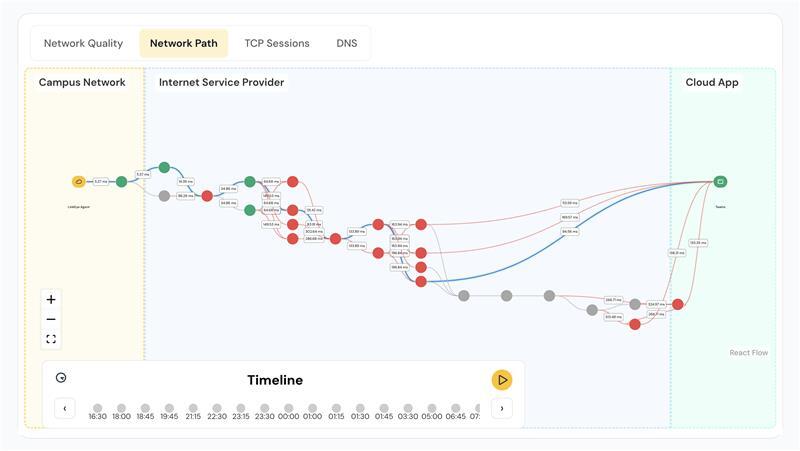

Effective network state recovery requires systems to understand the current operational baseline, validate whether a configuration still applies, and identify any changes across compute, network, or security layers that might compromise the integrity of a restore.

Tool Sprawl and Fragmentation

The insight gap widens further when systems are fragmented. Organizations often use multiple tools for monitoring, logging, configuration archiving, and change detection. A Grafana Labs survey indicates that 52% of companies use six or more observability tools, and 11% use more than sixteen, often managing data from over ten sources.

These environments create silos that slow incident response, complicate change correlation, and increase operational cost. Rather than reinforcing recovery, the saved configuration becomes just another isolated artifact in a disjointed workflow.

In the absence of integrated change management and an up-to-date CMDB, teams struggle to trace which change triggered an outage, or whether a restored configuration reintroduces conflict. Tool sprawl is a natural outgrowth of fragmentation and compounds the visibility gap. The average enterprise uses between 20 to 49 observability tools, according to IDC (IDC Observability Trends, 2023). Each additional tool introduces potential for overlap, delay, or even blind spots during incident response. Worse, teams spend more time managing tools than managing the network itself.

The Solution: Intelligent Observability

Networks are dynamic. What was once a stable baseline may drift as systems evolve. Without adaptive modeling, observability tools generate more noise than insight, exhausting teams and delaying meaningful action.

Modern platforms address this by providing intelligent observability and learning normal behavior patterns per node, service, and device, distinguishing between benign drift and real anomalies, and reducing alert fatigue so teams can act faster and with more precision.

Context-aware or “diff-aware” backups allow teams to roll back only the exact changes that caused issues, instead of reverting entire environments and introducing regressions. This evolution in network intelligence shifts the entire goal from recovery to prevention.

Some AI-powered systems are already beginning to analyze configuration files, change tickets, and network state, correlating those changes across infrastructure layers and flagging misalignments before they result in outages. They go beyond static snapshots by embedding awareness of config drift and dependencies. But restoration alone isn’t the end goal, user validation is just as critical.

A configuration may restore connectivity, but the real measure of reliable network state recovery is when users experience normalcy. User experience probes act as crucial post-recovery indicators, measuring performance and behavior from the user’s perspective, and help quantify whether the network has truly returned to an acceptable state.

Systems that incorporate user validation ensure recovery efforts actually resolve the problem as experienced by users. That distinction is critical to knowing when the network is truly back to a stable state.

When capabilities like configuration awareness, misconfiguration detection, and user-validated network recovery come together in a unified ecosystem, they deliver more than isolated tools every could. Backups remain foundational, but they’re incomplete without insight, cross-domain visibility, and intelligent AI that connects everything in context. Platforms like LinkEye, which unify observability and configuration intelligence, are helping teams close the gap between detection and resolution.

A good backup restores a system. A great system prevents the need for restoration. Real resilience is when the network adapts instantly, protects the user experience, and recovers without guesswork. That’s what true operational maturity looks like, and it’s where smart observability is taking us.