“Everything is operating as expected.”

Every CIO has heard those words, often just before things go sideways.

In today’s hybrid, multi-vendor networks, the real threats rarely announce themselves. They slip in quietly: a device drifting away from its baseline configuration, an undocumented ACL change, or a minor setting altered deep within a network device. On their own, they look harmless. But over time, they ripple through dependencies, shifting baselines until a critical service suddenly falters.

It’s no coincidence that many major outages can be traced back to such invisible changes. BackBox research suggests that around 45% of network-related outages stem from configuration management failures, errors that went unnoticed until they cascaded. And according to Uptime Institute, four in five organizations believe their most recent serious outage could have been prevented with better configuration management and processes.

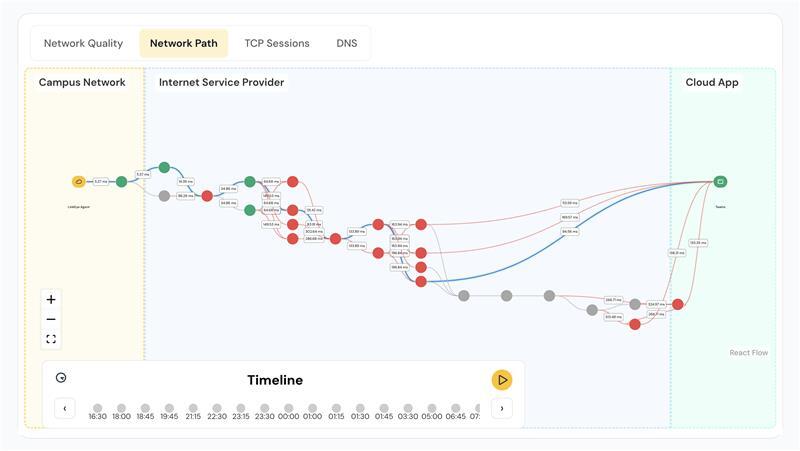

The question isn’t whether change is happening in your network; it’s whether you can see it in time. Traditional monitoring excels at flagging what’s already broken. But the industry’s focus is shifting toward observability that can surface subtle, contextual signals, and toward AI-powered analysis that can interpret them before risk turns into downtime.

Why Invisible Changes Are So Dangerous

Network operations teams don’t lack data. They’re flooded with logs, alerts, SNMP traps, syslog messages, and packet captures. But when outages happen, the root cause often traces back to a small change whose connection to the rest of the network went unnoticed until it snowballed.

In single-vendor environments, this drift might be easier to catch. But across multi-vendor, API-driven, cloud-and-edge networks, the complexity multiplies, and the cumulative effect is instability. IBM estimates the average cost of downtime at over $7,500 per minute. Such outages stall revenue, erode customer trust, drain engineering time, and extend mean time to resolution (MTTR).

From Observability to Predictive Hygiene

Observability is foundational to the future of network management because it stitches together a coherent narrative of the network’s state across layers, vendors, and environments. Done right, observability exposes not only what changed but why it matters. The opportunity lies in evolving toward predictive network hygiene: reducing the risk of outages before they manifest. That means:

- Baselining what “healthy” looks like across configurations, versions, and dependencies.

- Detecting drift the moment it starts, even before it affects users.

- Prioritizing remediation based on business impact, not just technical severity.
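
The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular product: the device names, configuration snippets, and `impact` weights are all made up, and the baseline is assumed to be available as plain text. Drift is found with a line-level diff from the standard library's `difflib`, then ranked by a hypothetical business-impact score.

```python
import difflib
from dataclasses import dataclass


@dataclass
class DriftFinding:
    device: str
    changed_lines: list
    impact: int  # hypothetical business-impact weight (higher = more critical)


def detect_drift(baseline: str, running: str) -> list:
    """Return the lines added to or removed from the baseline config."""
    diff = difflib.ndiff(baseline.splitlines(), running.splitlines())
    # ndiff prefixes removed lines with "- " and added lines with "+ "
    return [line for line in diff if line.startswith(("+ ", "- "))]


def rank_findings(findings: list) -> list:
    """Order drifted devices by business impact, then by size of the change."""
    drifted = [f for f in findings if f.changed_lines]
    return sorted(
        drifted, key=lambda f: (f.impact, len(f.changed_lines)), reverse=True
    )


# Illustrative data only: device names, configs, and weights are invented.
BASELINES = {
    "edge-fw-1": "hostname edge-fw-1\naccess-list 10 permit 10.0.0.0 0.0.0.255",
    "lab-sw-7": "hostname lab-sw-7\nvlan 20",
}
RUNNING = {
    "edge-fw-1": "hostname edge-fw-1\naccess-list 10 permit any",
    "lab-sw-7": "hostname lab-sw-7\nvlan 20\nvlan 30",
}
IMPACT = {"edge-fw-1": 9, "lab-sw-7": 2}

findings = [
    DriftFinding(name, detect_drift(BASELINES[name], RUNNING[name]), IMPACT[name])
    for name in BASELINES
]
ranked = rank_findings(findings)

for finding in ranked:
    print(finding.device, finding.impact, finding.changed_lines)
```

In this toy data the firewall's loosened ACL sorts ahead of the lab switch's extra VLAN because of its higher impact weight, even though both changes are small. In practice the weight would come from whatever SLA, SLO, or compliance mapping a team already maintains.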

This shift is already underway among leading infrastructure teams, enabled by the intersection of observability and AI.

The Role of AI: Seeing What Humans Can’t

AI excels at pattern recognition at scale. It’s not a replacement for engineering expertise, but it can be configured to catch what would otherwise go undetected. While a human might overlook a minor 0.2% routing anomaly, a machine learning model can correlate that deviation with historical trends, peer environments, or vendor-specific quirks. Already, we’re seeing AI used to:

- Identify config drift across diverse vendors.

- Detect unusual device behavior tied to firmware inconsistencies.

- Link minor changes to past outage patterns.

AI won’t auto-heal your network tomorrow, but it’s making the invisible visible. It helps teams act on weak signals before they become business problems.
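
To make the idea of a "weak signal" concrete, here is a deliberately simple sketch. It is not a machine learning model; it uses a plain z-score against a device's own history, which is one of the most basic ways to flag a small deviation that a human scanning dashboards might miss. The metric (a route-change rate expressed in percent), the sample values, and the threshold of 3.0 are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def zscore(history: list, latest: float) -> float:
    """How many standard deviations `latest` sits from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs >= 2 points
    if sigma == 0:
        # A perfectly flat history: any deviation at all is notable.
        return 0.0 if latest == mu else float("inf")
    return (latest - mu) / sigma


def is_anomalous(history: list, latest: float, threshold: float = 3.0) -> bool:
    """Flag readings that are unusually far from this device's own baseline."""
    return abs(zscore(history, latest)) >= threshold


# Hypothetical history: route-change rate (%) over recent intervals.
route_change_pct = [0.01, 0.02, 0.01, 0.02, 0.01, 0.02, 0.01, 0.02]

print(is_anomalous(route_change_pct, 0.02))  # a reading in the usual range
print(is_anomalous(route_change_pct, 0.2))   # the "minor 0.2%" deviation
```

A reading of 0.2% looks negligible in absolute terms, but relative to this device's history it is far outside the normal range. Production systems typically go further by comparing against peer devices and seasonality, which this sketch does not attempt.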

The Future-Ready Network Hygiene Playbook

You don’t need to overhaul your stack to reduce risk today. Here are practical steps:

- Map the true baseline: Track config states, firmware versions, and known quirks across platforms.

- Correlate config and telemetry data: Go beyond device health and tie behavior to actual configuration changes.

- Rank risks by business impact: Not all drift is equal. Link changes to SLAs, SLOs, or compliance tiers.

- Pilot agentic workflows in safe domains: Try auto-ticketing or triage bots in non-critical areas. Prove value, build trust, then expand.
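
As one way to picture the "correlate config and telemetry data" step, the sketch below pairs each telemetry anomaly with any configuration change on the same device in the preceding 30 minutes. Everything here is assumed for illustration: the record shapes, the device name, the timestamps, and the window length. Matching on the same device only is a simplification; tracing effects across dependent devices would need a topology or dependency map.

```python
from datetime import datetime, timedelta
from typing import NamedTuple


class Event(NamedTuple):
    device: str
    time: datetime
    description: str


def correlate(changes, anomalies, window=timedelta(minutes=30)):
    """Pair each anomaly with config changes on the same device that
    happened no more than `window` before it."""
    pairs = []
    for anomaly in anomalies:
        for change in changes:
            lag = anomaly.time - change.time
            if anomaly.device == change.device and timedelta(0) <= lag <= window:
                pairs.append((change.description, anomaly.description, lag))
    return pairs


# Hypothetical change log and telemetry feed.
changes = [
    Event("core-rtr-2", datetime(2024, 1, 10, 9, 0), "NTP server updated"),
    Event("core-rtr-2", datetime(2024, 1, 10, 14, 0), "ACL 110 modified"),
]
anomalies = [
    Event("core-rtr-2", datetime(2024, 1, 10, 14, 12), "packet loss above baseline"),
]

pairs = correlate(changes, anomalies)
for change_desc, anomaly_desc, lag in pairs:
    print(f"{anomaly_desc} followed '{change_desc}' by {lag}")
```

A match like this is a lead for an engineer to investigate, not proof of cause, which is why it suits a low-risk pilot such as enriching tickets with the most recent related change.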

The past was reactive, the present is proactive, and the near future is predictive. That progression lets teams spend more time architecting resilient systems, optimizing for scale, and aligning network performance with business outcomes. Thoughtful adoption of intelligent observability lays the groundwork for this shift, ensuring that when someone says, “everything is operating as expected,” leaders can trust it.